In this first blog post of mine, I will walk you through a tutorial on how to scrape information from the web. ‘Data Scientist’ is a new buzzword in the job market, so we will look at data scientist jobs posted on Naukri, an Indian job search website. I will code this in Python using the following libraries: (1) urllib2 and (2) BeautifulSoup. I took inspiration from Jesse’s blog, which describes a similar analysis on indeed.com.

Let's start by reading the HTML page into a string. Next, we use BeautifulSoup to create a soup object, a tree structure built from the HTML that is easy to parse. In the code below, I have specified the URL that corresponds to the data science and machine learning jobs category on naukri.com.

```python
import urllib2
import bs4

# Specify the base URL for the machine learning jobs category
base_url = 'http://www.naukri.com/machine-learning-jobs-'
source = urllib2.urlopen(base_url).read()
soup = bs4.BeautifulSoup(source, "lxml")
```
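urllib2 only exists in Python 2; in Python 3 its `urlopen` moved to `urllib.request`. Here is a minimal Python 3 sketch of the same fetch step. Sending a User-Agent header and a timeout are my own additions, the header value is a placeholder, and I have not checked whether Naukri requires either; `build_request` and `fetch_html` are hypothetical helper names.

```python
import urllib.request

BASE_URL = "http://www.naukri.com/machine-learning-jobs-"

# Assumption: a browser-like User-Agent; this value is illustrative only.
HEADERS = {"User-Agent": "Mozilla/5.0 (compatible; job-scraper-tutorial)"}


def build_request(url):
    """Wrap the URL in a Request object that carries our headers."""
    return urllib.request.Request(url, headers=HEADERS)


def fetch_html(url, timeout=30):
    """Download a page and decode its bytes into a str."""
    with urllib.request.urlopen(build_request(url), timeout=timeout) as resp:
        # Use the charset the server declares, falling back to UTF-8
        charset = resp.headers.get_content_charset() or "utf-8"
        return resp.read().decode(charset, errors="replace")
```

With that in place, `bs4.BeautifulSoup(fetch_html(BASE_URL), "lxml")` would build the same kind of soup object as the Python 2 code.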

Once we have the soup object, the basic idea is to search for the tags that correspond to each element in the HTML. You can view the HTML source in the browser by right-clicking the page and choosing Inspect. Each page contains several hyperlinks, and we first need to extract the ones that lead to the job description pages.

```python
all_links = [link.get('href') for link in soup.findAll('a')
             if 'job-listings' in str(link.get('href'))]
print "Sample job description link:", all_links[0]
```

Sample job description link: https://www.naukri.com/job-listings-Machine-Learning-Scientist-Data-Science-Premium-Jobs-Mumbai-3-to-4-years-261116002864?src=jobsearchDesk&sid=14801799685391&xp=1
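To show the same link filtering without BeautifulSoup, here is a Python 3 sketch built on the standard-library `html.parser`. It also resolves relative hrefs with `urljoin` and drops duplicate links; both are precautions I am assuming could matter, not behaviour I observed on Naukri. `JobLinkParser` and `extract_job_links` are hypothetical names.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class JobLinkParser(HTMLParser):
    """Collect the href of every <a> tag whose URL contains a marker string."""

    def __init__(self, base_url, marker="job-listings"):
        super().__init__()
        self.base_url = base_url
        self.marker = marker
        self.links = []
        self._seen = set()

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href")  # None when the tag has no href
        if href and self.marker in href:
            absolute = urljoin(self.base_url, href)  # resolve relative links
            if absolute not in self._seen:  # keep only the first occurrence
                self._seen.add(absolute)
                self.links.append(absolute)


def extract_job_links(html, base_url):
    """Return job description links in the order they appear in the page."""
    parser = JobLinkParser(base_url)
    parser.feed(html)
    parser.close()
    return parser.links
```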

We now have the link to a sample job description page, so we can fetch that page and parse its HTML. Below, I explain how this is done for a single page, and I'll provide the code that does it for all the pages corresponding to data science jobs.

```python
jd_url = all_links[0]
jd_source = urllib2.urlopen(jd_url).read()
jd_soup = bs4.BeautifulSoup(jd_source, "lxml")
```

Next, we extract the individual job-level attributes such as location, education, salary, and required skills. The key here is to identify the correct tag and extract the text enclosed within it.

```python
# Job location
location = jd_soup.find("div", {"class": "loc"}).getText().strip()
print location

# Job description
jd_text = jd_soup.find("ul", {"itemprop": "description"}).getText().strip()
print jd_text

# Experience level
experience = jd_soup.find("span", {"itemprop": "experienceRequirements"}).getText().strip()
print experience

# Role-level information: keep the text after the ':' in each non-empty child
labels = ['Salary', 'Industry', 'Functional Area', 'Role Category', 'Design Role']
role_info = [content.getText().split(':')[-1].strip()
             for content in jd_soup.find("div", {"class": "jDisc mt20"}).contents
             if len(str(content).replace(' ', '')) != 0]
role_info_dict = dict(zip(labels, role_info))
print role_info_dict

# Skills required
key_skills = '|'.join(jd_soup.find("div", {"class": "ksTags"}).getText().split(' '))[1:]
print key_skills

# Education level: each non-empty child reads like "UG: ..." or "Doctorate: ..."
edu_info = [content.getText().split(':', 1)
            for content in jd_soup.find("div", {"itemprop": "educationRequirements"}).contents
            if len(str(content).replace(' ', '')) != 0]
edu_info_dict = {label.strip(): value.strip() for label, value in edu_info}
# Sometimes the education information for one of the degrees is missing
for degree in ['UG', 'PG', 'Doctorate']:
    edu_info_dict.setdefault(degree, '')
print edu_info_dict

# Company info
company_name = jd_soup.find("div", {"itemprop": "hiringOrganization"}).contents[1].p.getText()
print company_name
```

The attributes for the sample job description are shown in the output below:

Mumbai

We are looking for a machine learning scientist who can use their skills to research, build and implement solutions in the field of natural language processing, automated answers, semantic knowledge extraction from structured data and unstructured text. You should have a deep love for Machine Learning, Natural Language processing and a strong desire to solve challenging problems. Responsibilities : - Using NLP and machine learning techniques to create scalable solutions. - Researching and coming up with novel approaches to solve real world problems. - Working closely with the engineering teams to drive real-time model implementations and new feature creations.

3 - 4 yrs

{'Salary': u'Not Disclosed by Recruiter', 'Functional Area': u'Analytics & Business Intelligence', 'Industry': u'IT-Software / Software Services', 'Role Category': u'Analytics & BI', 'Design Role': u'Data Analyst'}

Machine Learning|Natural Language Processing|NLP|Research|Statistical Models|Big data|Statistical Modeling

{u'UG': u'Any Graduate - Any Specialization', u'Doctorate': u'Doctorate Not Required', 'PG': ''}

Premium-Jobs

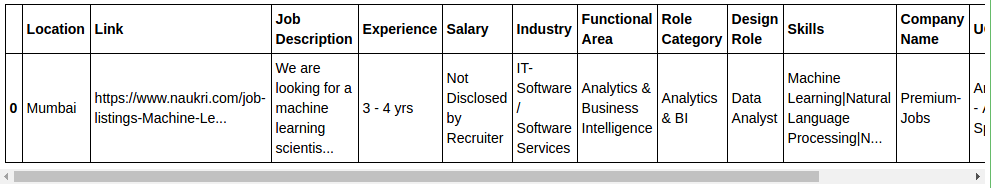
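The label/value handling in the education step does not depend on BeautifulSoup, so it can be pulled out and tested on its own. Here is a Python 3 sketch, assuming each non-empty child of the education `div` yields text of the form `Label: value` once you call `getText()`, which is what the sample output above suggests. `parse_label_value` and `education_info` are hypothetical helper names.

```python
# Python 3 sketch, no third-party dependencies.
EDU_LABELS = ("UG", "PG", "Doctorate")


def parse_label_value(lines):
    """Turn lines like 'UG: Any Graduate' into a {label: value} dict."""
    info = {}
    for line in lines:
        if ":" not in line:
            continue  # skip fragments that carry no label
        # Split on the first ':' only, so a value containing ':' stays intact
        label, value = line.split(":", 1)
        info[label.strip()] = value.strip()
    return info


def education_info(lines, labels=EDU_LABELS):
    """Parse education lines and fill any missing degree with ''."""
    info = parse_label_value(lines)
    for label in labels:
        info.setdefault(label, "")
    return info


print(education_info(["UG: Any Graduate - Any Specialization",
                      "Doctorate: Doctorate Not Required"]))
# {'UG': 'Any Graduate - Any Specialization', 'Doctorate': 'Doctorate Not Required', 'PG': ''}
```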

Each job posted on this website has the job-level attributes shown above, so it makes sense to store them in a table-like structure. The DataFrame object from the pandas library is very handy in such a scenario. Here is how we can create one with the information above.

```python
import pandas as pd

column_names = ['Location', 'Link', 'Job Description', 'Experience', 'Salary',
                'Industry', 'Functional Area', 'Role Category', 'Design Role',
                'Skills', 'Company Name', 'UG', 'PG', 'Doctorate']

# Collect every attribute of this job posting into one dict
df_dict = {'Location': location, 'Link': all_links[0], 'Job Description': jd_text,
           'Experience': experience, 'Skills': key_skills, 'Company Name': company_name}
df_dict.update(role_info_dict)
df_dict.update(edu_info_dict)

naukri_df = pd.DataFrame()
naukri_df = naukri_df.append(df_dict, ignore_index=True)
# Reorder the columns to the preferred order specified above
naukri_df = naukri_df.reindex(columns=column_names)
print naukri_df
```
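Calling `append` once per job copies the whole frame every time, and `DataFrame.append` was removed in pandas 2.0. A common alternative, sketched here in Python 3 for current pandas, is to collect one dict per job in a list and build the DataFrame once at the end. `build_jobs_frame` is a hypothetical helper name.

```python
import pandas as pd

COLUMN_NAMES = ['Location', 'Link', 'Job Description', 'Experience', 'Salary',
                'Industry', 'Functional Area', 'Role Category', 'Design Role',
                'Skills', 'Company Name', 'UG', 'PG', 'Doctorate']


def build_jobs_frame(records, columns=COLUMN_NAMES):
    """Build the table once from a list of per-job dicts.

    Passing `columns` fixes the column order; a key missing from a record
    becomes NaN, and keys not listed in `columns` are left out.
    """
    return pd.DataFrame(records, columns=columns)


records = []
# Inside the scraping loop you would append one dict per job, e.g.
records.append({'Location': 'Mumbai', 'Experience': '3 - 4 yrs', 'PG': ''})
jobs_df = build_jobs_frame(records)
print(jobs_df.shape)  # (1, 14)
```

Passing `columns` replaces the separate `reindex` step.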

We need to do this for all the data science job postings on the website. Let's first check the total number of machine learning jobs posted on the site. This number is in the tag at the top of the listings page: `<div class="count">`.

```python
num_jobs = int(soup.find("div", {"class": "count"}).h1.contents[1].getText().split(' ')[-1])
print num_jobs
```

2890

So there are 2890 job postings (at the time this blog was written!). Each listings page shows 50 jobs, so the number of listings pages we need to crawl to collect the links to all the job description pages is 58 (2890 / 50 = 57.8, rounded up; calculated in the code snippet below).

```python
import math

num_pages = int(math.ceil(num_jobs / 50.0))
print "URL of the last page to be scraped:", base_url + str(num_pages)
```

URL of the last page to be scraped: http://www.naukri.com/machine-learning-jobs-58
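The two steps above can be combined into a short Python 3 sketch that turns the count text into the full list of listings-page URLs. Two assumptions here are mine: the count text may contain other numbers or thousands separators (so the sketch takes the last number rather than the last word), and page k of the listings lives at `base_url + str(k)` for k from 1 to the page count; the post only confirms the URL of the last page. `parse_job_count` and `listing_urls` are hypothetical names.

```python
import math
import re

BASE_URL = 'http://www.naukri.com/machine-learning-jobs-'
JOBS_PER_PAGE = 50  # the page size observed in this post; it may change


def parse_job_count(text):
    """Return the last number in the count text, tolerating thousands separators."""
    numbers = re.findall(r'\d[\d,]*', text)
    if not numbers:
        raise ValueError('no job count found in %r' % text)
    return int(numbers[-1].replace(',', ''))


def listing_urls(num_jobs, base_url=BASE_URL, per_page=JOBS_PER_PAGE):
    """Build one URL per listings page.

    Assumes page k lives at base_url + str(k) for k = 1..num_pages.
    """
    num_pages = math.ceil(num_jobs / per_page)  # 2890 / 50 = 57.8 -> 58
    return [base_url + str(page) for page in range(1, num_pages + 1)]


# '1-50 of 2,890' is a made-up example of what the count text might look like
urls = listing_urls(parse_job_count('1-50 of 2,890'))
print(len(urls), urls[-1])
# 58 http://www.naukri.com/machine-learning-jobs-58
```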

Now we need to put all of the above bits and pieces into a single function that extracts information about all the ML jobs on the site. I have provided a link to my GitHub page, where you can find the code as well as the complete IPython notebook for this blog post. Towards the end of the notebook, you will notice that I used a library called cPickle. It lets us save the naukri_df dataframe as a pickle object, which can be loaded directly back into pandas for later use. Scraping all of this information took about an hour on my laptop.
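The complete function lives in the notebook on GitHub. As an illustration only (not that code), here is a Python 3 sketch of the outer loop plus saving the results. `scrape_all`, `save_pickle`, and `load_pickle` are hypothetical names; `fetch` and `parse` stand for your own download and BeautifulSoup-parsing functions; and the pause between requests is my own choice, not something the site specifies. In Python 3, cPickle no longer exists as a separate module: the standard `pickle` module uses its C implementation automatically.

```python
import pickle
import time


def scrape_all(urls, fetch, parse, delay=1.0, sleep=time.sleep):
    """Fetch and parse each URL in turn, pausing between requests.

    fetch(url) -> str and parse(url, html) -> dict are supplied by the caller.
    Pages that raise an exception are recorded in `failed` instead of
    stopping the run.
    """
    records, failed = [], []
    for i, url in enumerate(urls):
        if i and delay:
            sleep(delay)  # wait before every request after the first
        try:
            records.append(parse(url, fetch(url)))
        except Exception as exc:  # broad so one bad page does not abort a long crawl
            failed.append((url, repr(exc)))
    return records, failed


def save_pickle(obj, path):
    """Serialize any picklable object (a list of dicts, a DataFrame) to disk."""
    with open(path, "wb") as fh:
        pickle.dump(obj, fh, protocol=pickle.HIGHEST_PROTOCOL)


def load_pickle(path):
    """Load an object previously written by save_pickle."""
    with open(path, "rb") as fh:
        return pickle.load(fh)
```

For a DataFrame specifically, pandas also offers `DataFrame.to_pickle` and `pd.read_pickle` for the same round trip.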

In my next blog post, I will use the data we have extracted to do some data analysis and gain insight into the general data science job market in India.